A New Frontier of AI-Powered DAOs

Over the past decade, Decentralised Autonomous Organisations (DAOs) have grown from small experimental collectives into powerful financial entities. Many now control treasuries worth tens or even hundreds of millions of dollars, and can fund ecosystems, back projects, or shift liquidity across markets in seconds.

The natural next step is AI-powered DAOs: autonomous agents that can propose, vote, and even execute treasury decisions without constant human intervention. Imagine an AI agent dynamically reallocating a community’s treasury into stable assets during market turbulence, or funding ecosystem projects based on real-time performance metrics.

The appeal is obvious: faster reaction times, lower operational overhead, and decision-making that doesn’t get bogged down in human bottlenecks.

But so is the risk. If a DAO’s treasury is effectively governed by an AI agent, who ensures its decisions are aligned with the DAO’s charter? How do stakeholders verify that allocations are made in good faith rather than drifting into opaque or exploitable behaviours?

This is where the debate shifts from "can AI manage treasuries?" to "should AI manage treasuries, and under what conditions?"

Why Current Approaches Fall Short

Recognising the risks, several projects have started tackling the auditability problem. Platforms like Olas and Kite promote the idea of reasoning-traceable agents: AIs that don’t just act, but also leave behind a trail of logic explaining why they acted the way they did.

On the surface, this is a huge improvement over traditional black-box systems. Instead of a statistical model simply outputting a decision, these frameworks attempt to reconstruct the reasoning path in the form of graphs, symbolic steps, or explanatory logs. For a DAO treasury, that could mean being able to look back and see why an allocation was made.

Yet the problem runs deeper. These reasoning traces are almost always retrofitted scaffolds layered over fundamentally probabilistic engines. The AI may produce a justification that looks coherent, but the core process remains opaque: a statistical guess whose logic is explained after the fact rather than exposed as it happens.

This creates three serious problems for treasury governance:

- Illusion of transparency – the reasoning logs may be human-readable, but they don’t always reflect the true inner workings of the model.

- Fragility at scale – as decisions multiply, retrofitted reasoning becomes harder to audit with confidence, especially if different agents are stitching together complex financial moves.

- Trust bottleneck – stakeholders are still left having to “trust” the wrapper, rather than being able to independently verify the underlying decision process.

For small-scale experiments, symbolic wrappers may be enough. But when millions in assets and the credibility of decentralised governance are at stake, these shortcomings turn from technical quirks into existential risks.

The Non-Negotiable Guardrail: Auditability

When an AI agent is trusted with treasury powers, speed is optional — auditability is not.

No DAO can claim credible governance if its stakeholders cannot trace why decisions were made. Every action — whether shifting assets into stablecoins, approving a grant, or reallocating liquidity — must carry a reasoning trail that is:

- Immutable on-chain – once recorded, it cannot be altered or erased.

- Human-readable – explanations that stakeholders, auditors, and regulators can understand without needing to reverse-engineer a black-box model.

- Deterministically traceable – the trail must directly reflect the actual state transitions inside the system, not a post-rationalised explanation bolted on afterwards.
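The first two properties can be illustrated with a hash-chained log. This is a minimal Python sketch under stated assumptions: each entry stores a structured action and a plain-language rationale, and each entry's hash commits to the previous one, so altering or removing an earlier entry invalidates the chain. `ReasoningLog`, `entry_hash` and the entry fields are hypothetical names, not part of any DAO framework or Qognetix product. An attacker who recomputes every later hash would still change `head()`, which is why, in practice, the head hash (or the entries themselves) would be published on-chain; that step is not shown. A hash chain only makes tampering evident. It cannot show that a rationale reflects the system's real state transitions, which is what the third property demands.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List

GENESIS = "0" * 64  # hypothetical placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, action: Dict, rationale: str) -> str:
    """Hash an entry's contents together with the previous entry's hash."""
    payload = json.dumps(
        {"prev": prev_hash, "action": action, "rationale": rationale},
        sort_keys=True,         # canonical key order, so the hash is reproducible
        separators=(",", ":"),  # no incidental whitespace
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class ReasoningLog:
    entries: List[Dict] = field(default_factory=list)

    def head(self) -> str:
        """Hash of the latest entry: the value one would anchor on-chain."""
        return self.entries[-1]["hash"] if self.entries else GENESIS

    def append(self, action: Dict, rationale: str) -> str:
        """Record an action with its plain-language rationale; return its hash."""
        h = entry_hash(self.head(), action, rationale)
        self.entries.append({"action": action, "rationale": rationale, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute every link. An edited entry, or one removed from the middle,
        breaks the chain; truncating the tail is caught by comparing head()
        against the previously anchored value."""
        prev = GENESIS
        for e in self.entries:
            if entry_hash(prev, e["action"], e["rationale"]) != e["hash"]:
                return False
            prev = e["hash"]
        return True
```

In use, a treasury agent would call `append` for every rebalance or grant, and auditors would call `verify` and compare `head()` with the anchored value.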

Without this guardrail, a DAO’s treasury is governed not by verifiable rules but by faith — faith that the AI is acting in alignment, faith that logs are accurate, faith that drift won’t creep in over time.

And history shows what happens when finance runs on faith alone.

In decentralised systems, where “don’t trust, verify” is the guiding ethos, auditability is the one guardrail that cannot be compromised.

Qognetix: Building Auditability Into the Substrate

Most approaches try to make AI explainable by adding layers of scaffolding on top of existing black-box engines. At Qognetix, we start from the opposite direction: auditability is built into the substrate itself.

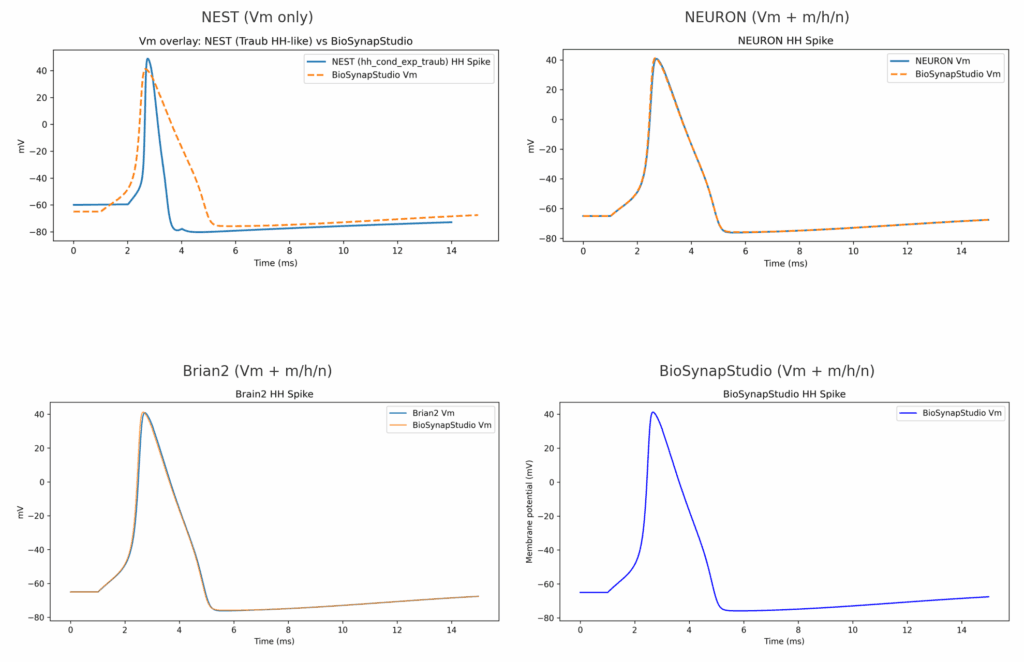

Our biologically faithful engine doesn’t generate reasoning trails as an afterthought. It operates as a deterministic state machine, where every update step is transparent, reproducible, and auditable. Instead of asking an AI to explain itself after the fact, we design it so that the explanation is inherent in the way it runs.
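Reproducibility is what makes a deterministic state machine auditable: anyone holding the initial state and the input sequence can re-run it and compare results step by step. The Python sketch below illustrates that general idea with a hypothetical toy update rule (`toy_step`) standing in for a real engine; it is not Qognetix's implementation. It records a SHA-256 digest of the state after every step and lets an auditor locate the first step at which a claimed trace diverges from an independent replay. The state is kept integer-only on purpose, since floating-point results can differ across hardware and compilers.

```python
import hashlib
from typing import Callable, List, Tuple

State = Tuple[int, ...]
Step = Callable[[State, int], State]


def digest(state: State) -> str:
    """Fingerprint a state; repr() of a tuple of ints is stable across runs."""
    return hashlib.sha256(repr(state).encode("utf-8")).hexdigest()


def toy_step(state: State, x: int) -> State:
    """Hypothetical update rule: a pure function of (state, input), integers only."""
    acc, count = state
    return ((acc * 31 + x) % 1_000_003, count + 1)


def run(step: Step, s0: State, inputs: List[int]) -> List[str]:
    """Execute the machine and record a state digest after every update step."""
    trace, s = [], s0
    for x in inputs:
        s = step(s, x)
        trace.append(digest(s))
    return trace


def first_divergence(step: Step, s0: State, inputs: List[int], claimed: List[str]) -> int:
    """Replay independently; return the first step whose digest differs, or -1."""
    replayed = run(step, s0, inputs)
    for i, (ours, theirs) in enumerate(zip(replayed, claimed)):
        if ours != theirs:
            return i
    if len(replayed) != len(claimed):
        return min(len(replayed), len(claimed))  # a step is missing or extra
    return -1
```

Because the update is a pure function, the audit needs no access to the operator's machine, only the inputs and the published trace.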

This design choice creates three critical advantages for treasury governance:

- Auditability by design – every spike, update, and decision emerges from well-defined, mechanistic rules. The logic is not reconstructed later; it is the process itself.

- Hardware-ready primitives – because our solver architecture maps cleanly to finite-state machines, it can be directly accelerated on FPGAs or ASICs. That means lower energy cost, higher throughput, and sovereign control without dependence on cloud black boxes.

- Sustainable scaling – as complexity grows, the model doesn’t collapse under layers of retrofitted logic. It remains deterministic, traceable, and verifiable at scale, qualities that become more valuable the larger the treasury and the higher the stakes.
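The source does not specify Qognetix's neuron model or solver, so the following is only a generic illustration of what mechanistic, finite-state update rules can look like: a leaky integrate-and-fire neuron updated with integer arithmetic. The threshold, leak shift and 16-bit clamp are hypothetical values. Because the leak is a bit shift and the membrane value is clamped to a fixed register width, each step is a pure function over a bounded integer state. Updates of this kind translate naturally to FPGA or ASIC logic, and every spike can be traced back to the exact inputs that produced it.

```python
from typing import List, Tuple

# Hypothetical parameters, chosen for illustration only.
THRESHOLD = 1000   # membrane value at which the neuron fires
RESET = 0          # membrane value immediately after a spike
LEAK_SHIFT = 4     # leak of v/16 per step, implemented as a right shift
V_MAX = 2**16 - 1  # clamp to an unsigned 16-bit register


def lif_step(v: int, current: int) -> Tuple[int, bool]:
    """One integer-only update: leak, integrate, clamp, then fire-and-reset."""
    v = v - (v >> LEAK_SHIFT) + current
    v = max(0, min(v, V_MAX))
    if v >= THRESHOLD:
        return RESET, True
    return v, False


def simulate(currents: List[int], v0: int = 0) -> List[Tuple[int, int, bool]]:
    """Return (step, membrane value, spiked) for every step: a complete trace."""
    v, trace = v0, []
    for t, i in enumerate(currents):
        v, spiked = lif_step(v, i)
        trace.append((t, v, spiked))
    return trace
```

With a constant input of 200, the membrane value climbs 200, 388, 564, 729, 884 and crosses the threshold at step index 5, after which the cycle repeats; running the simulation twice yields an identical trace.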

In short, where other projects offer “explainability wrappers,” Qognetix offers a transparent substrate. One is decoration; the other is architecture. And when the future of decentralised finance is on the line, only the latter can guarantee the trust DAOs require.

Why This Matters for Treasury Management

For DAOs, this isn’t an abstract technical debate — it cuts right to the heart of legitimacy. A treasury that can’t be proven trustworthy is a treasury that risks collapse, no matter how clever the agents managing it appear to be.

By embedding auditability directly into the substrate, Qognetix enables treasuries to operate with provable governance, not just plausible explanations. That means:

- Confidence for stakeholders – community members and investors can verify that allocations follow the DAO’s charter, instead of hoping that opaque AI reasoning is aligned.

- Institutional credibility – regulators, auditors, and potential partners can engage with DAOs on the basis of evidence, not black-box assurances.

- Scalable security – as treasuries grow from thousands into billions of dollars, the human effort needed for oversight doesn’t have to grow linearly with them. Deterministic audit trails make verification efficient, even at extreme scale.
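Checking whether an allocation follows a DAO's charter can itself be a deterministic function that any stakeholder can re-run. In this minimal sketch, the charter is reduced to two hypothetical rules (a cap on any single grant and a minimum stablecoin reserve after the grant), expressed in basis points so the check uses exact integer arithmetic. `Charter`, `check_grant` and the asset names are illustrative and not drawn from any real DAO or from Qognetix. The function returns a human-readable list of violated rules, so an empty list is itself an auditable result.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Charter:
    """Hypothetical charter limits, in basis points (10_000 bps = 100%)."""
    max_single_grant_bps: int = 500      # no single grant above 5% of treasury
    min_stable_reserve_bps: int = 3_000  # at least 30% in stablecoins afterwards


def check_grant(
    holdings: Dict[str, int],
    stable_assets: Set[str],
    source: str,
    amount: int,
    charter: Charter = Charter(),
) -> List[str]:
    """Return the charter rules a proposed grant would break; [] means compliant.

    Values are integers in one common unit (e.g. whole dollars), so every
    comparison is exact and re-running the check always gives the same answer.
    """
    if amount > holdings.get(source, 0):
        return [f"insufficient {source} balance"]

    violations = []
    total = sum(holdings.values())
    if amount * 10_000 > total * charter.max_single_grant_bps:
        violations.append("grant exceeds single-grant limit")

    # Reserve rule is evaluated on the treasury as it would look after payment.
    total_after = total - amount
    stable_after = sum(v for k, v in holdings.items() if k in stable_assets)
    if source in stable_assets:
        stable_after -= amount
    if stable_after * 10_000 < total_after * charter.min_stable_reserve_bps:
        violations.append("stablecoin reserve below charter minimum")
    return violations
```

For example, with 400,000 in USDC and 600,000 in ETH, a 60,000 grant paid in ETH breaks only the hypothetical 5% single-grant cap.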

For a decentralised financial system that promises to be more open, fair, and resilient than traditional finance, this shift is not optional — it’s existential.

Qognetix doesn’t just improve decision-making; it secures the very foundation of decentralised financial governance.

The Road Ahead

AI-powered DAOs are not a thought experiment — they are emerging now. Communities are already experimenting with autonomous agents to manage liquidity, deploy capital, and evaluate proposals. The upside is enormous: always-on governance, rapid decision-making, and treasuries that can adapt in real time.

But so are the risks. If the intelligence behind those treasuries is a black box, then the very promise of decentralisation is undermined. We trade human opacity for algorithmic opacity — and that is no progress at all.

The opportunity, then, is to rethink what “intelligence” in DAOs should mean. Not just fast or efficient, but faithful, auditable, and sovereign. A treasury guided by agents whose every step can be traced and verified doesn’t just protect assets — it protects the legitimacy of decentralised governance itself.

This is the horizon Qognetix is building toward: a biologically faithful substrate where auditability is not added later but present from the first line of execution, and where hardware acceleration and deterministic primitives let treasuries scale sustainably without drifting into unaccountable behaviours.

The question is no longer "can AI run a DAO’s treasury?" It is how, and whether the systems we trust are built on hope or on proof.